Hello,

This is a long essay about charlatans and the nature of science. I wrote it some time ago in Russian, and it was even partially published in a Russian-language journal, the link to which I will refrain from openly sharing for pseudonymity reasons. The current English version is approximately twice as long, updated, and unabridged. This is the last part that is supposed to tie everything together. You can find part one here, part two here, and part three here.

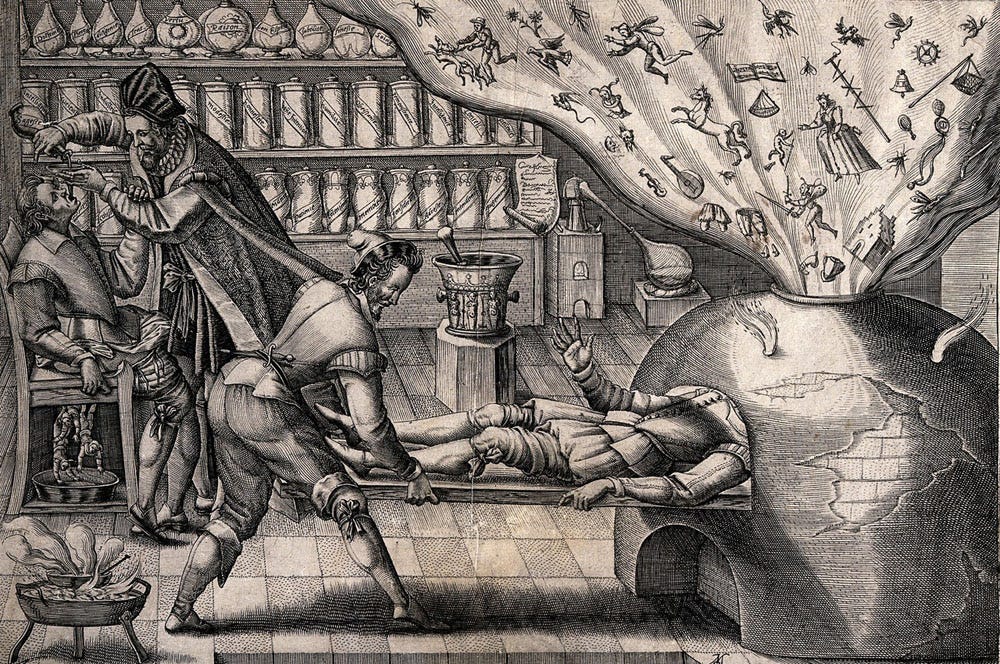

Hieronymus Bosch, “The Cure of Folly”


Scientific Charlatans and Where to Find Them, Part 4

“Scientific trust is assumed, but tested.” — Daniel Dennett

5. Science for Real

In the last part of this essay, I will try to explain how to tell a scientist from a charlatan and, more specifically, what frame of mind you should have when approaching this question. As we saw from the examples in previous parts, it is sometimes not as straightforward as one might imagine. To do that, I will need to go a little way into the bowels of science: first into its history, and then into its philosophy. Hopefully, by the end of this part (the longest one!), you will understand science a little better, and maybe even share my enthusiasm for it.

But since this is Substack, and not a print edition, I can do something fun first, namely, show a video that encapsulates this part perfectly.

5.1 A Bit of History

It is logical to assume that charlatans in science have existed for as long as science itself. But I dare to disagree with this assumption. It seems to me that charlatans are, in fact, older. People have always tried to deceive each other by pretending to be learned men. But real scholars turned into true scientists much later. To explain this admittedly strange statement, I need to delve a bit into the history of science.

To begin with, science, in our understanding of the word, is quite a young thing. It is only about three hundred and fifty years old, no more. All modern science started in the mid-seventeenth century. Before that, there were flashes of reason here and there: Ibn al-Haytham around the turn of the eleventh century, Leonardo and Copernicus in the mid-fifteenth to early sixteenth, and Brahe, Kepler, Galileo, and Hooke in the late sixteenth and seventeenth are the most vivid examples. But there was no science as such; there were philosophers, sages, mystics, astrologers, and alchemists. They had existed since ancient Greece. And when people say “the science of Aristotle” or “the science of Leonardo”, I cringe a little and really want to argue with these definitions. I don't think either Aristotle or Leonardo was a scientist in the modern sense of the word. The first was a tremendous philosopher, the second a genius engineer.

Even the word “scientist” appeared not so long ago. It was first used by the English philosopher William Whewell in 1840. He wrote: “...we are in need of a name to describe someone engaged in science in general. I am inclined to call him a 'Scientist'.” Before that, scientists were called “natural philosophers” or “men of science”.

In the mid-seventeenth century, science began to develop, partly through the efforts of the greats (Newton, Boyle, Pascal, Leibniz, Huygens, Leeuwenhoek, and others) and partly through the support of the powers that be. In 1660, with the blessing of King Charles II, the Royal Society of London, the first (or one of the first) scientific societies in the world, was founded. There, the first attempts were made to separate science from philosophy, metaphysics, and religion. This laid the foundation for modern science.

People look at science now and, it seems to me, don't fully understand the colossal path it has traveled over such a short period of time. Very few things develop faster than science. People have been at war with each other for several thousand years, and we haven't progressed much beyond Sun Tzu. Religions have existed for many centuries, and how often do new dogmas appear? Scientific discoveries are made every day. What makes this possible? I believe it's due to a unique balance between accepting new theories and being skeptical of them. Daniel Dennett (R.I.P.) put it as “trust is assumed, but tested”. This is an integral part of what's called the scientific method.

Here is a great quote from Bertrand Russell:

There's an interesting book by E. A. Burtt titled "The Metaphysical Foundations of Modern Physical Science" (1925), which convincingly discusses many unfounded assumptions made by people who created modern science. He rightly points out that in Copernicus' times, facts that would have justified his system were unknown, but there were known facts that spoke against it. "Modern empiricists, had they lived in the 16th century, would have been the first to ridicule the new cosmology." The main goal of the book is to discredit modern science by suggesting its discoveries were happy accidents arising from superstitions as deep as those of the Middle Ages. I think this reveals a misunderstanding of the scientific approach by the author: a scientist is distinguished not by what he believes, but by how and why he believes it. His beliefs are not dogmatic, but experiential. They are based on evidence, not authority or intuition. Copernicus had the right to call his theory a hypothesis; his opponents were wrong to think that new hypotheses are undesirable. (Bertrand Russell, "A History of Western Philosophy")

Charlatans, however (liars, blabbermouths, and Tartuffes of science), have always been around. There have always been people ready to explain to others how everything really works. But true scientists are a relatively new phenomenon. It's worth remembering this.

5.2 The Scientific Method

The scientific method, like many other important concepts, is both very simple and very complex at the same time. If we try to define it as concisely as possible, it comes down to this: the scientific method is a set of tools used by scientists to acquire, preserve, and multiply knowledge, determine truth, and formulate new questions. The core of the scientific method consists of several “stages”, each of which might seem trivial. However, these stages, as we know them today, were concisely formulated only in the nineteenth century (initially by William Whewell). These stages (in a simplified form) are:

1. Asking a question ("I wonder how this happens?")
2. Gathering existing information ("What is already known about this?")
3. Formulating a hypothesis ("Perhaps this happens because of that...?")
4. Making predictions based on the hypothesis ("Therefore, we should also observe those...")
5. Experimentation and data analysis ("Oh, yikes!")
6. Drawing conclusions based on the collected data ("So, this happens because of that only under these conditions!")
7. Publishing results ("Hey guys! Look what I found out!")
8. Asking a new question ("But why does this happen because of that only under these conditions?")

This is known as the “hypothetico-deductive model”, which modern scientists more or less follow. These stages can be performed by one scientist or, as is much more common nowadays, by several. A classic example of the scientific method in action is the discovery of the structure of DNA and the explanation of its principal function by Watson and Crick.

In the early 1950s, it was already established that DNA was responsible for the transmission of genetic material, but the mechanism of this transmission and the structure of the molecule were unknown. At that time, there were several competing theories; one of them, for instance, belonged to the great chemist Linus Pauling, who believed that DNA had a triple-helix structure. Watson and Crick, however, thought that the helix was double and even proposed a mechanism for its replication. Confirmation of their theory would come from a specific diffraction pattern produced by a DNA crystal. After Rosalind Franklin obtained this diffraction pattern (the famous "Photo 51"), they were able to confirm their hypothesis and published their findings. Thus, Watson and Crick were responsible for stages 2-4 and 6-7, while Rosalind Franklin and Linus Pauling (who measured the lengths of atomic bonds in the crystal) were responsible for stage 5.

One of the main “traps” of the scientific method is that it is impossible to prove a theory, only to disprove it. As Einstein said, “No amount of experimentation can ever prove me right; a single experiment can prove me wrong.” This means that none of the existing scientific theories is absolutely true; they are merely unrefuted. This is a very important point that confuses many people, including scientists themselves. The scientific method has become so deeply ingrained in scientific education and jargon that such things seem too trivial to mention each time. Remember: when a scientist says “this theory is true”, they mean “this theory is the closest to the truth among those we know.” This seems natural to scientists, but not always to others.

This leads to a very important conclusion: a scientist is never absolutely (or, more precisely, blindly) sure about anything. A scientist's confidence in certain explanations of physical phenomena rests only on the fact that these explanations have not yet been refuted. And that, admittedly, is often a rather shaky foundation. Of course, it is unlikely that anyone will refute century-old physical laws, but one can extend them or prove that they only work under certain conditions. The relationship between Newtonian and quantum mechanics comes to mind: the latter didn't refute the former but showed that it has limits. Outside these limits, Newtonian mechanics is insufficient, and quantum effects are observed. Thus, science doesn't discard old laws (after all, the weight of a body near the Earth's surface is still mg) but extends and supplements them.
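To put a rough number on where those limits lie, here is a minimal Python sketch (my own illustration, not part of the original argument). It uses the de Broglie wavelength λ = h/(mv); a common rule of thumb is that quantum effects matter when this wavelength is comparable to the size of the system under study. The chosen bodies, their speeds, and the 1-ångström "atom size" are assumed, illustrative values.

```python
# A rough check of where Newtonian mechanics stops being enough:
# compare the de Broglie wavelength lambda = h / (m * v) with atomic sizes.

PLANCK_H = 6.62607015e-34         # Planck constant, J*s (exact SI value)
ELECTRON_MASS = 9.1093837015e-31  # kg
ATOM_SIZE_M = 1e-10               # assumed rough size of an atom (~1 angstrom)


def de_broglie_wavelength(mass_kg: float, speed_m_s: float) -> float:
    """Return the de Broglie wavelength in metres for a non-relativistic body."""
    if mass_kg <= 0 or speed_m_s <= 0:
        raise ValueError("mass and speed must be positive")
    return PLANCK_H / (mass_kg * speed_m_s)


# Illustrative (assumed) cases: a fast electron and a thrown baseball
examples = {
    "electron at 1e6 m/s": (ELECTRON_MASS, 1e6),
    "0.145 kg baseball at 40 m/s": (0.145, 40.0),
}

for name, (mass, speed) in examples.items():
    wavelength = de_broglie_wavelength(mass, speed)
    ratio = wavelength / ATOM_SIZE_M
    print(f"{name}: {wavelength:.2e} m, about {ratio:.1e} atom sizes")
```

With these assumptions, the electron's wavelength comes out at roughly seven atomic diameters, so quantum mechanics cannot be ignored, while the baseball's is about twenty-four orders of magnitude smaller than an atom, which is why Newtonian mechanics describes it perfectly well.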

Here is an interesting example. Scientists in Bristol found a material, "purple bronze", which doesn't obey one of the classical laws of the physics of metals, the Wiedemann-Franz law. Their research was published in Nature Communications. Such a discovery isn't a tragedy or a disgrace for the long-deceased Wiedemann and Franz, nor is it a cause for teeth-gnashing by envious colleagues and supporters of the refuted law. It is an important and interesting fact that will surely lead to amendments to the Wiedemann-Franz law, may lead to interesting technological innovations, and has already led to a slightly better understanding of the world we live in.

A more historical example of the application of the scientific method was described in the Russian journal “Science and Life”, and I decided to translate it here because of its horrifying relevance to the modern world.

"An outstanding English biologist and evolutionist, a contemporary of Charles Darwin, Alfred Russel Wallace, was an active fighter against pseudoscience and various superstitions. In January 1870, Wallace read an advertisement in a scientific journal, in which the advertiser offered a bet of 500 pounds sterling to anyone who could visibly prove the sphericity of the Earth and "demonstrate by a method understandable to any reasonable person, a curved iron railway, river, canal, or lake." The bet was offered by John Hampden, author of a book arguing that the Earth was actually a flat disc.

Wallace decided to accept the challenge and chose a straight section of canal six miles long to demonstrate the Earth's curvature. There were two bridges at the beginning and end of the section. On one of the bridges, Wallace set up a perfectly horizontal 50x telescope with reticle wires in the eyepiece. In the middle of the canal, three miles from each bridge, he placed a tall marker with a black disc on it. A board with a horizontal black stripe was hung on the other bridge. The height above water of the telescope, black disc, and black stripe was exactly the same.

If the Earth (and the water in the canal) were flat, the black stripe and black disc should align in the telescope's eyepiece. However, if the water's surface was convex, mirroring the Earth's curvature, then the black disc would appear higher than the stripe. And that's exactly what happened. Moreover, the degree of discrepancy closely matched the calculation derived from the known radius of our planet.

However, Hampden refused to even look through the telescope, sending his secretary for this purpose instead. The secretary assured the assembled crowd that both markers were at the same level. Any discrepancy observed, he argued, was due to aberrations in the telescope's lenses. This led to a lengthy legal battle, which eventually forced Hampden to pay the 500 pounds, but Wallace spent significantly more on legal expenses."

Yuri Frolov, "Science and Life" No. 5, 2010

A similar experiment was performed by documentarian Dan Olson on beautiful Lake Minnewanka. You can watch the video here.
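The quote says the offset "closely matched the calculation derived from the known radius of our planet". As a rough illustration of that calculation (a sketch, not Wallace's own arithmetic), the Python below computes how far the middle marker of a six-mile straight canal should rise above the line of sight between the two end markers. It assumes a spherical Earth with a mean radius of 6,371 km and ignores atmospheric refraction, which in practice bends the line of sight and typically reduces the observed offset; on a perfectly flat surface the offset would be zero. The function name and the optional marker-height parameter are my own.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius
METRES_PER_MILE = 1609.344
METRES_PER_FOOT = 0.3048


def midpoint_bulge(length_m: float, radius_m: float = EARTH_RADIUS_M,
                   height_m: float = 0.0) -> float:
    """How far the middle marker rises above the telescope-to-target sight line.

    The telescope, the middle marker and the far target all stand height_m
    above the water. On a sphere of radius R the two ends sit at angles
    +/- theta from the middle, with theta = (length / 2) / R, so the middle
    marker is (R + h) * (1 - cos theta) above the straight chord between them.
    Atmospheric refraction is ignored.
    """
    theta = (length_m / 2) / radius_m
    # 1 - cos(theta) == 2 * sin(theta / 2) ** 2; the sine form avoids
    # cancellation error for very small angles
    return (radius_m + height_m) * 2 * math.sin(theta / 2) ** 2


canal_m = 6 * METRES_PER_MILE
bulge_m = midpoint_bulge(canal_m)
small_angle_m = (canal_m / 2) ** 2 / (2 * EARTH_RADIUS_M)  # a^2 / (2R)

print(f"predicted offset: {bulge_m:.2f} m ({bulge_m / METRES_PER_FOOT:.1f} ft)")
print(f"small-angle formula: {small_angle_m:.2f} m")
```

Under these assumptions the sketch predicts an offset of roughly 1.83 m, or about six feet, and the familiar small-angle formula a²/(2R) agrees with the exact expression to well under a millimetre.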

5.3 Demarcation and Falsifiability

Any discussion about science, even at an amateur level, would be incomplete without these two concepts, so I'll briefly explain them. Demarcation is an attempt to distinguish science from “non-science”, and falsifiability is a demarcation criterion proposed by Karl Popper, whom we've already mentioned. Now, in a bit more detail.

Why is it necessary to draw boundaries between science and non-science? Why not lump everything together? Indeed, many people believe that this distinction is unnecessary or even harmful. One reason for the emergence of the demarcation problem is historical. In the seventeenth century, it became necessary to draw a line between science and religion, mainly to keep the peace. At some point, it was necessary to ensure that scientists did not encroach on the domain of the church (the creation of the world, biblical miracles, etc.) and that the church, in turn, did not try to control, prohibit, or refute physical discoveries. The directive, so to speak, came “from the powers that be”: for example, King Charles II of England greatly facilitated the creation of the Royal Society of London, granting it a royal charter in 1662, apparently just to prevent scientists and clergy from fighting. Scientists were allowed to study everything that could be demonstrated. For example, Robert Hooke showed experiments with springs and elastic bodies (and later microscopy, living cells, and much more), and Robert Boyle demonstrated the vacuum, his work with gases, and other thermodynamic phenomena. Scientists tried not to delve into “higher realms”, leaving them to philosophers and theologians. This later became one of the main demarcation criteria: the need to verify theories experimentally.

Here it is necessary—essential!—to clarify the difference between a theory and a hypothesis. You'll be surprised how often people confuse these two concepts. A hypothesis is an unproven statement. It can be any guess, assumption, insight, etc. A hypothesis can be plucked out of thin air. It can be derived from sacred texts, or it can be birthed by a half-asleep student during a seminar. A theory, in the "scientific" sense of the word, is a series of logical conclusions reflecting objective reality. A theory is a kind of forecast based on objective, real data. Scientific theories are formulated, developed, and tested in accordance with the scientific method. Theories, as we've already discussed, cannot be proven but only refuted. Hence, the theory of evolution and the theory of relativity are theories. This doesn't mean there are no facts supporting them. On the contrary, it means there are no facts refuting them.

Thus, every theory is a hypothesis, but not every hypothesis is a theory. As an example, let's look at two statements: Statement A, "The Earth is flat", and Statement B, "The Earth is an ellipsoid that rotates around its axis". Today, Statement A is false, while Statement B is a fact, because we can now see it with our own eyes. But in Galileo's time, both statements were hypotheses, as there was no way to verify them directly. Statement B was also a plausible theory that Galileo adhered to. It was based on a) the concept of the horizon and observations of ships at sea, b) the voyages of explorers such as Magellan, and c) the phenomenon of tides. (We now know that the third point was incorrect: tides are caused not by the rotation of the Earth but mainly by the Moon. At the time, however, only the genius Kepler thought so. This does not diminish Galileo's greatness.)

In fact, the distinction between the concepts of theory and hypothesis is even deeper (a theory is a "unit of science", a "set of propositions of some artificial language", etc.), but here I start to "flounder" with terms. Go to Bertrand Russell; he knows for sure.

The second, philosophical reason for the emergence of the demarcation problem is related to epistemology, the division between "knowledge" and "belief", and logical positivism more broadly. I won't dwell on this: the topic is quite complex and not easily grasped offhand. But it is interesting, so I refer the curious to Bertrand Russell's “A History of Western Philosophy” (where the origins of these concepts are clearly explained) or to any comprehensive textbook on the philosophy of science. I'll only briefly touch on Popper's criterion of falsifiability, and even then, I'll try to make my account more anecdotal than dogmatic.

In the 1920s, a philosophical circle emerged in Vienna, aptly named “The Vienna Circle”. It was a gathering of some of the brightest minds of the era, who discussed various significant issues, including matters of science. It was here that the philosophical movement known as logical positivism was born, which advocated for an empirical (i.e., experimental) approach to knowledge acquisition. Their criterion for “scientificness” was verifiability; anything that could be tested was considered scientific, whereas matters that couldn't be tested (like the existence of God) were deemed questions of faith. The Vienna Circle thrived with its criterion of verifiability until Popper came along and ruined everything.

He essentially said, “Allow me, gentlemen. You're confusing apples with oranges: 'meaningful theories' with 'scientific theories'. Most scientific theories, based on empirical experience, cannot be proven. Take a simple observation: there are only white swans in the Vienna Zoo. Based on this observation, let's propose a theory: all swans are white. Obviously, it is empirically unprovable. To prove it, one would need to catch all the swans in the world, past, present, and future, and check that they are all white. However, to disprove it, finding just one non-white swan is enough. Wouldn't it be simpler to make refutability, not verifiability, the criterion for 'scientificness'?” Thus, according to Popper, a scientific theory is one that can be refuted. Note that this says nothing about the quality of the theory: its accuracy, sensibleness, logic, etc. Scientificness is not synonymous with these qualities; it is just another criterion. Why is it needed? See above.
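Popper's asymmetry can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function name and the twelve-swan "Vienna Zoo" sample are hypothetical. No number of confirming observations moves the verdict from "not yet refuted" to "proven", but a single counterexample is enough to refute the claim.

```python
def check_universal_claim(observations, holds):
    """Check the claim 'holds(x) is true for every x' against observations.

    A single counterexample refutes the claim. If none is found, the claim is
    only 'not yet refuted': no finite list of observations can prove it.
    """
    for item in observations:
        if not holds(item):
            return "refuted", item
    return "not yet refuted", None


def is_white(swan_colour):
    return swan_colour == "white"


vienna_zoo = ["white"] * 12  # hypothetical sample of observed swans
print(check_universal_claim(vienna_zoo, is_white))
print(check_universal_claim(vienna_zoo + ["black"], is_white))
```

Running it prints `('not yet refuted', None)` for the all-white sample and `('refuted', 'black')` once a single black swan is added.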

Popper also provided more significant examples than the swan anecdote. Take Einstein's theory of relativity (it was widely discussed at the time). Is it scientific? Of course: one can devise an experiment that could refute it. Such an experiment was conducted by Arthur Eddington, and it did not refute the theory. Is it true, then? Yes, until proven otherwise. Take Thomas Young's theory of light propagating through the ether. Is it scientific? Certainly. Is it true? No: it was refuted by the Michelson-Morley experiment. Take Freud's psychoanalytic theory. Is it true? Ask your therapist. Is it scientific? According to Popper, no: there is no human behavior it couldn't explain. Hence, there is no experiment that could refute it. Look for an example of a non-scientific and untrue theory on your own.

If you're still (quite reasonably) wondering why all this fuss about demarcation is needed, consider this perspective. Science can, and should, be trusted. It sometimes errs, sometimes goes off the beaten path, and sometimes gets obsessed with something not so important, but at least it strives to uncover the truth. Not the “Truth” with a capital T and in quotes, but just the truth, about the world we live in. Charlatans can't be trusted; they have different goals. To help us distinguish the former from the latter, hectares of forest have been chopped down to become books on demarcation.

To sum up everything mentioned above, the main criteria for a sound scientific theory are testability by experiment and falsifiability. Besides, theories are valued for their reproducibility of results, consistency, relevance, generality, succinctness, compatibility with existing theories, and the ability to “adjust” to new data. Certain problems are associated with the last point, which will be discussed shortly. But first, a few words about scientific publications.

5.4 Publish or Perish

A reasonable question that one can find popping up here and there, especially after the COVID pandemic, is: “So what, only those who publish in certain specific journals can be considered scientists?” And indeed, why this gatekeeping? Why must one be “in the system”, in this (surely) corrupt mechanism, to be considered a scientist? Why, look, Professor Chudinov published fifteen volumes at his own expense, yet no one wants to acknowledge them as science? In this part, I'll try to briefly answer all these “whys”, explain what “peer-reviewed journals” are, and roughly describe the journey of a scientific publication from the moment it is written to the moment it is printed.

First off, it's crucial to understand that getting published in a peer-reviewed journal is a significant achievement for a scientist. Each article is a cause for celebration, not a routine event like, say, for journalists or flash fiction writers. The reason is that publishing an article signifies not just that a number of people will read it. It's also a form of recognition, an affirmation that yes, this work is genuinely scientific, and it's worthy of publication. And this recognition comes not from some outsider but usually from several prominent, serious scientists, experts in the field.

“Peer-reviewed” means exactly that every article must be read, approved, and reviewed by several individuals capable of understanding and assessing the scientific value of the article before publication. This system, despite its flaws, successfully filters out an unimaginable amount of nonsense, lies, and bad science. Here's how it works.

Suppose you've conducted new scientific research and want others to know about it. You must choose, from hundreds of scientific journals, the one most relevant to your study's theme. Then you must write an article according to the journal's requirements, which could be anything from length restrictions to the number of images. Afterward, you send off the article and wait. The article lands on the desk of the journal editor, usually a distinguished scientist. They might reject it outright (if it's complete nonsense) or send it for peer review. If you're lucky, the article is distributed to reviewers, usually people who have already published several articles on the topic. Reviewers can do anything with the article. They can reject it outright, accept it immediately, or return it to the author with requests for corrections or additions (e.g., to conduct necessary experiments the author omitted). The revised and corrected article is then sent back to the reviewers, and the process repeats. Since there are usually multiple reviewers (typically three), opinions can diverge. In such cases, more reviewers are appointed, and the whole process starts anew. And this happens with every submitted article. It can take months, even years, from the moment an article is written until it's published.
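The review loop described above can be modelled, very loosely, as a small state machine. The Python sketch below is a toy under assumptions of my own: the verdict names, the rule for combining them, and the scripted rounds are all hypothetical, and the editor's initial desk rejection is left out. Real journals rely on editorial judgment rather than a fixed rule.

```python
from enum import Enum


class Verdict(Enum):
    ACCEPT = "accept"
    REVISE = "revise"
    REJECT = "reject"


def next_step(verdicts):
    """Decide what happens after one round of reviews (a simplified, hypothetical rule)."""
    if not verdicts:
        raise ValueError("a review round needs at least one verdict")
    kinds = set(verdicts)
    if kinds == {Verdict.ACCEPT}:
        return "publish"
    if kinds == {Verdict.REJECT}:
        return "reject"
    if {Verdict.ACCEPT, Verdict.REJECT} <= kinds:
        return "add reviewers and start over"  # opinions diverge sharply
    return "revise and resubmit"


def run_review(rounds):
    """Walk through scripted review rounds until a final decision is reached."""
    for number, verdicts in enumerate(rounds, start=1):
        step = next_step(verdicts)
        print(f"round {number}: {step}")
        if step in ("publish", "reject"):
            return step
    return "still under review"


A, R, X = Verdict.ACCEPT, Verdict.REVISE, Verdict.REJECT
outcome = run_review([[R, A, R], [A, X, A], [A, A, A, A]])
```

In the scripted example, the first round ends in revisions, the second splits between acceptance and rejection and so brings in more reviewers, and the third ends in publication.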

This lengthy and laborious process serves one single purpose: to avoid publishing crap. Reviewers' names are kept confidential from the author, making bribery unlikely. Reviewers work voluntarily, scientific editors often do too, the author normally doesn't pay for publication, nor do they receive any payment. Compare this process with “went to a random publishing house, paid their fee, printed anything”, and you'll understand why the question “Where are your articles published?” is the first one to ask any charlatan. In ninety percent of cases, the answer is enough to realize you're dealing with a charlatan.

Please note that I am describing the platonic version of the peer-review process: how it should work in an ideal situation. Reality is always more muddled. The crap-filter of peer review is far from infallible. Criticism of the peer-review process, in my opinion, is absolutely warranted and even necessary, but falls outside the scope of this essay. For a harsh but fair example, I highly recommend the recent essay “The Rise and Fall of Peer Review” or some of its author's other work.

Anonymous, “Doctor Wurmbrandt, der im gantzen Land, überall bekannt”

5.5 Deromanticizing Science

Charlatans are appealing because they promise quick and substantial results. It's tempting to believe them, right? After all, at least in common perception, that's how science seemed to progress in the “good old days”. Hop!—Newton's first law, hop!—the photoelectric effect, hop!—we're off to space. Where are our great discoveries, one might ask? It's a valid question, but it can only come from someone who doesn't quite understand how science works.

Having been showered with new discoveries as if from a cornucopia, people have grown accustomed to thinking that science owes them something, and immediately, please and thank you. But that's not the case. Science is a long-term investment. You can't just pay a scientist a lot of money and “order” a great scientific discovery. Similarly, you can't just give a writer a generous advance and commission a brilliant novel (although many try). The most you can do for both is to provide optimal working conditions. Most often, given the right abilities, the result will be good, solid work; sometimes a complete failure; and sometimes a masterpiece. In science, as in art, inspiration is key.

A scrupulous reader will, of course, note that scientists typically need much more money than writers. For pen-and-typewriter workers, supplying paper and morning coffee is enough, but scientists need tools, materials, grants, conferences and, what's utterly beyond the pale, students. But it's worth remembering that, with a favorable outcome, the returns will be proportionate, and even those same writers will be able to get new electronic paper and a nano-coffee maker with pleasant music and vanilla aroma.

Nonetheless, many note that, supposedly, great discoveries were once made at every turn, but now they seem to have dried up and diminished. This is both true and false. On one hand, expecting someone to discover, for example, a new fundamental law of physics is indeed unreasonable nowadays. Current findings tend to be more about adjustments and refinements to already known laws. In this sense—yes, discoveries have become more “minor”. But this isn't due to modern scientists' inability to dig deep, as is often explained, but merely a measure of the depth of the hole that has already been dug.

When we learn to walk, each step (especially the first) is a great achievement. But by the age of three or four, we're running around the apartment, not noticing the steps we take. The next big leap comes only at around seven, when we first ride a bike by ourselves. Then, at eighteen, when we get our driver's license (timings may vary depending on the country you're in). This is natural for us: we don't rush things, and we're not disappointed that twelve-year-olds are still barely wobbling on their bikes and not rushing to get behind the wheel. Nor do we complain that at eighteen, every step we take is no longer considered an achievement. The same applies to science.

Charlatans often try to hide behind the grand names of the past, hitching a ride on the romance of it all. Are we missing great discoveries? Well, here you go: a new Leonardo, a new Galileo, Einstein, and Newton all in one! It's pleasant to believe that our generation can produce geniuses. In reality, it does, just of a different kind. The era of chivalry, the romantic age of science, has, for better or worse, passed. That's why there are almost no “new Leonardos”, “new Newtons”, and “new Einsteins”, and there will likely be fewer and fewer. Expecting otherwise would be as absurd as longing for the great military heroes of the past and expecting new Herculeses, new Rolands, and new Lancelots. Ours is the time of scientific generals. But they, as befits generals, stay in their headquarters and don't show their faces. And people who appeal to grand names are most likely scientific quacks. Nostalgia for the “good old days” shouldn't lead us to deny that those times are behind us.

Another reason we don't notice great scientific discoveries is that we don't give them due importance, simply because we lack the knowledge to understand them. But that's natural: school and even university education isn't built on contemporary, cutting-edge science. The percentage of Einstein's contemporaries capable of fully appreciating his contribution to science was very, very small. Today, we simply cannot appreciate the people who will be featured in future textbooks. But our children and grandchildren will. And our gray and boring scientific routines will seem devilishly romantic to them, partly because of the benefits they will bring.

"How does your research benefit humanity?"

The English Prime Minister in the mid-19th century asked Faraday a similar question when he visited his laboratory and saw Faraday moving a wire in a magnet: “What are you doing here? What practical value does it have?” To which Faraday smiled and said, “Dear Prime Minister, a time will come when you will tax this effect!”

5.6 Ideology in Science

For a change, I'll start with a quote. Here's an excerpt from the infamous “August Session” of the Lenin All-Union Academy of Agricultural Sciences of 1948, titled “On the Situation in Biological Science.” The speaker was Trofim Lysenko.

[...] We, the Michurinists, must openly admit that we have not yet fully utilized the wonderful opportunities created in our country by the Party and the Government to fully expose Morganist metaphysics, entirely imported from the hostile foreign reactionary biology. The Academy, having just been replenished with a significant number of Michurinist academicians, is now obliged to fulfill this most important task. This will be crucial in training personnel and strengthening the support of collective and state farms by science.

Morganism-Mendelism (the chromosomal theory of heredity) in various forms is still taught in all biological and agronomic universities, while the teaching of Michurinist genetics is essentially not introduced at all. Often, even in the highest official scientific circles of biologists, followers of Michurin and Williams were in the minority. Until now, they were also in the minority in the previous composition of the All-Union Academy of Agricultural Sciences named after V.I. Lenin. Thanks to the care of the Party, the Government, and personally Comrade Stalin, the situation in the Academy has drastically changed. Our Academy has been replenished and will soon be further enriched with a significant number of new academicians and corresponding members - Michurinists. This will create a new environment in the Academy and new opportunities for the further development of Michurin's teachings.

It is absolutely incorrect to claim that the chromosomal theory of heredity, which is based on sheer metaphysics and idealism, has been suppressed until now. On the contrary, the situation has been the opposite [...]

Let me remind you of the result of Comrade Lysenko's ideological work. In short, Soviet genetics was simply destroyed. This led to the repression of scientists, including the arrest and death of N. Vavilov, one of the leading Soviet biologists. This happened in the 1940s. In the early 1950s, Watson and Crick deciphered the structure of DNA and explained the mechanism of gene transmission, the very "chromosomal theory" that Lysenko vehemently fought against. The long-term outcome is heartbreaking: despite the impressive achievements of Soviet scientists in physics and chemistry, Soviet biologists lagged behind their Western counterparts by many, many years. Regrettably, outstanding Soviet molecular biologists can be counted on one hand, and the entire (very young) field suffered a severe blow. Not to mention the significant damage to the Soviet economy caused by applying “ideologically correct science” in industry.

As for the great Russian scientist Ivan Michurin, who had already passed away by then, "Michurinist agrobiology" has no more to do with him than torsion fields have to do with the mathematician Élie Cartan. Michurin himself, a world-renowned biologist and one of the pioneers of selective breeding, never created a general biological system (like Michurinist genetics) and never absolutized the influence of the environment on heredity. Towards the end of his life, Michurin agreed with Mendel's teachings and claimed that his experiments, intended to refute Mendel's laws, had actually confirmed them.

Physics and chemistry under Soviet rule were also subject to ideological interventions, but to a lesser extent. For example, as in Nazi Germany, there were attempts to reject the “ideologically incorrect” quantum mechanics and the theory of relativity, but fortunately for all of Soviet science, the main priority at the time was the creation of the atomic bomb. Lavrentiy Beria, who oversaw the project, said, “We won't let these scoundrels interfere with the work”, and the ideological debate in physics was suppressed.

These and other examples highlight the danger, even the inadmissibility, of using ideology instead of the scientific method. More generally, this raises the question of how authority interferes in science. Unfortunately, science cannot fully abstract itself from authority; it's a matter of finances. Nonetheless, there is no division of science into “ideologically correct” and “incorrect”, into “ours” and “yours”. Science is science. And anyone who claims otherwise is a charlatan. In sciences with a stronger evidential base (physics, chemistry), such examples are less common, but they do occasionally slip through.

So, to what extent should authority—and through it, ideology—influence real science? The answer is very simple: it can and sometimes should control not the scientific discoveries themselves but the directions in which science moves. And this control is needed primarily for the efficiency of the entire system. Ideally, the state acts as the navigator of science. America needed the atomic bomb—budget funds were allocated, brilliant scientists were found, several of the most important scientific discoveries were made along the way, and an invaluable contribution was made to physics. If the state is concerned about conserving natural resources and the environment, funds are allocated to alternative energy sources and “green” science. If the state is interested in more efficient use of available resources—voilà, science focuses on oil purification and coal processing.

The state, of course, can also allocate funds to utterly senseless and hopeless scientific directions (not to mention following outright charlatans), thereby harming both itself and science. This only shows that the balance between the two is very important and very fragile, and those in power must understand science to a degree sufficient to make such decisions. Unfortunately, this is not always the case.

5.7 Science and Religion

This is a delicate topic. If you recall, the first scientific society was established precisely to allow curious individuals to engage in science without interference from the church. The “curious individuals”, on their part, promised not to delve into the global questions of existence and to focus on the earthly and simple: for example, which falls faster, a feather or a kettlebell? And what if we evacuate the air? Science was “conceived” as a way of understanding the world, an alternative to religious dogmas. A method to uncover the truth not by burying oneself in volumes of ecclesiastical texts but by studying the surrounding world with open eyes.

Since then, much water has flowed under the bridge (and a lot of air has been evacuated). Religion gradually gave way, science grew in importance and size, and the inevitable, and very frightening, happened. In the eyes of people who don't understand science, it turned into just another religion. Think about it: they do look very similar on the surface. Both science and religion require long preparation and training. Both are pursued in places somewhat isolated from the surrounding world (monasteries and universities). Both have their own specific jargon and languages, often inaccessible to the general public. In the end, even a lab coat differs from an acolyte's robes only in its color scheme. How can one not get confused? It's no wonder that comparisons of scientists to priests and Pharisees occasionally pop up among the general public.

—Are you Catholic?

—No, a physicist...

One way around this comparison is to imagine that science and religion are heading in opposite directions. Religion knows everything a priori: there are sacred texts, scriptures, canons. Religion goes from initial, axiomatic knowledge to current events. Any event can be explained from the perspective of already-known religious laws. Science initially knows nothing and moves in the opposite direction, from current events to accumulating some knowledge about the world. From these events, one can draw conclusions about the existing laws of nature. Religion knows, science learns. Everyone chooses for themselves.

This, by the way, doesn't mean that religiosity and a scientific mindset are mutually exclusive. I know several serious scientists who consider themselves religious people, and several believers who are interested in science.

When discussing the relationship between science and religion, it's impossible not to touch upon another slippery issue: the ongoing “debate” between evolutionists and creationists. What scares me is the framing of the question itself. Evolution and creationism shouldn't be compared at all, because the former is a scientific theory and the latter is a hypothesis built on faith. They are fundamentally different things, apples and oranges. You can't believe in evolution the way you believe in God, any more than you can believe in gravity. Over the past hundred-plus years, we've established that evolution happens. We have very good factual evidence for it, which anyone who knows how to use the internet can find.

That is why saying “I believe/don't believe in evolution” is illiterate. It's more accurate to say “I agree/disagree with the theory of evolution”, recognizing that “theory” refers specifically to a scientific theory (not just “one of the opinions”), the strongest and most enduring of those currently existing. Creationism (or any of its variants, including so-called “intelligent design”) is not a scientific theory: it fails Popper's criterion because it includes an irrefutable thesis from the outset. A scientific theory, as mentioned above, cannot be irrefutable.

To supplement the above, I'll quote the words of His Holiness John Paul II from his speech dated October 22, 1996.

Today, almost half a century after publication of the encyclical [by Pope Pius XII on evolution], new knowledge has led to the recognition of the theory of evolution as more than a hypothesis. It is indeed remarkable that this theory has been progressively accepted by researchers, following a series of discoveries in various fields of knowledge. The convergence, neither sought nor fabricated, of the results of work that was conducted independently is in itself a significant argument in favor of the theory.

There you have it.

Hieronymus Bosch, "The Tooth Puller, the Bagpipe Player and the Wood Collectors"

5.8 About the Problems

Scientists have many problems, ranging from “we have no money” to “nobody understands us”, just like teenagers. But some problems are more or less constant (“we never have any money!”), while others seem to worsen over time (“people understand us less and less!”). I'm far from sharing the hysteria brewing in the scientific community that humanity is sliding back into the Dark Ages, but I acknowledge that there are troubling trends. These trends, in my view, stem from the fact that most people don't understand what science really is.

All those simple and seemingly obvious things I mentioned earlier are ingrained in the subconscious of scientists and are the most natural ideas in the world to them. They are embedded in the very essence of classical university science education. Even though they're not often explicitly stated, we absorb them with every lab report we submit and every anecdote we hear about the lives of great scientists. This is not the case for most other people, leading to a misunderstanding of how science works, an aversion to it, and the flourishing of charlatans and tricksters of all stripes.

The root of this problem is in the education system. We—and I'm speaking about the entire world, not just any specific country—have forgotten how to teach science in schools. Or, rather, we've failed to adapt. Current methods of teaching science have essentially remained unchanged since the eighteenth century. In recent times (the last 30 years, in my view), two things have happened that have made the old format of school science teaching unacceptable.

First, the development of science has accelerated. I've mentioned this before, but I'll say it again. It seems to me that anyone who says that science has stalled and is going nowhere is just looking at an empty race track after a slew of cars have whizzed by. It's quite the opposite. Science has accelerated to insane speeds of development. Here's a simple indirect proof, which is also one of the problems. Nowadays (compared to the fifties and sixties, for example), to keep up with scientific progress and grasp the significance of new discoveries in a certain area, one needs quite deep and specific knowledge of that area. And the more developed the science, the deeper and more specific that knowledge needs to be. For instance, two biologists from completely different branches of biology (arguably a relatively young science) might still understand each other without a dictionary, but two mathematicians (mathematics being arguably the oldest science) might not always manage it. The faster and “further” science moves, the harder it is to keep up with new achievements. Nowadays, some sciences are moving so fast that, from the outside, it seems as if they're standing still. This leads to a lot of arguments on the internet (which is tolerable) and a global alienation of science from the public (which is bad). And old methods of teaching science are no longer able to stop this process.

Second, the internet appeared, and information (or knowledge, if you prefer) lost its intrinsic value. Thirty years ago, a school student could get scientific information from a teacher, a parent, a textbook, or maybe a popular science magazine. And that was pretty much it. In each of these cases, knowledge was sifted through a very fine sieve, and what reached the student was relatively correct and reasonable. Now, getting information is not a problem at all. What's become more difficult—and more important—is to distinguish the wheat from the chaff, to differentiate “good” knowledge from “bad”. This is what modern science teaching should focus on: what science is, why it's needed, what it deals with, what methods it uses, how it differs from non-science, etc. At least in the early stages. All these things need to be understood before one starts digging into the actual sciences—physics, chemistry, biology, etc. And it needs to start in school, ideally in the first grade.

5.9 What Can I Do?

Here we are, arriving at the main question. How do we fix what's not exactly broken but simply outdated? How do we upgrade an old horse to run on a high-speed highway?

I have two solutions. One is decent but unlikely. The other is so-so but quite achievable.

Let's start with the first one. A new subject is introduced in schools. To avoid scaring the kids with the daunting term "philosophy," it's called "history of science" or, even better, "culture of science." It would cover everything I've discussed above. The principles of how science works would be explained. Famous experiments, their objectives, and what they demonstrated would be discussed, and some of them even reproduced in class; science is experimental, after all. The biographies of great scientists would be covered in depth, not just as a small box next to their portrait in a physics textbook. After all, science is a very personal endeavor, and a lot can be learned about science itself from the lives of scientists. This shouldn't be a heavy, burdensome subject; an hour a week would suffice. Scientific principles are very simple. Even a first-grader can grasp that “trust is assumed, but tested”, as Daniel Dennett has put it. But they need to be ingrained in us as deeply as multiplication tables or table manners.

Terms like “demarcation” could be saved for the upper grades, along with Popper, Kuhn, and other bores. But teaching the basics of dealing with large volumes of information, distinguishing “good” information from “bad”, as early as possible is essential. The ability to draw conclusions from experiments and to soberly assess results is important not just in science but in life overall. The same goes for the basics of experimental logic and for learning from hard-earned mistakes. We learn these things one way or another, and then they are called “experience” or “life’s wisdom”, but teaching them in school wouldn't hurt. After all, there are plenty of less important subjects.

Of course, this option is utopian. It requires teachers who understand and love science. It needs funding, textbooks, government approval. It needs reform, and good will, and long-term planning. It needs sensible, enthusiastic people. And they're hardest to come by.

The second solution is simpler and more complicated at the same time. Simpler because it's feasible. More complicated because it's not up to some unknown enthusiasts or a distant government, but up to me and you. Specifically, you, since I'm already “in the game”. It's straightforward—take an interest in science. Read a popular science book. Read a scientist's biography; they're often quite amusing. Try Feynman, Hawking, Sagan. Flip through "Scientific American" or "National Geographic." The pictures are very pretty. Try to look a bit deeper than just the images, try to understand the mechanisms these pictures conceal. Spend twenty minutes on Wikipedia. It's not as boring as it might seem at first glance. It's an exploration, an adventure. Science is staggeringly, infinitely, unimaginably beautiful—from the electronic grid to the spiral of a galaxy. Take an interest in science. Learn more.

Then tell your friends. Or, more importantly, your children.

It might seem silly, even naive, but it's not. Next time, during a break, talk about something interesting from the world of science, not from sports or pop stars. Only talk about what interests you, don't turn the conversation into a tedious lecture. And don't make anything up. Just share news, tell a story.

In one of his interviews, Nobel laureate Andre Geim said it best: “Science is part of culture”. This phrase deserves to be placed next to the “Hollywood” sign in LA, so that everyone can see it. A cultured person doesn't need to know the composition of the paints Raphael used, but they will derive immense pleasure from the “Sistine Madonna”. And they will likely distinguish it from non-artistic scribbles. The same goes for science. The ability to enjoy it and to distinguish scientists from charlatans are signs of a cultured person.

This won't solve all problems, of course. But it definitely won't hurt. And that's quite something.

5.10 Conclusion

Let's return, for a moment, to the reasons I started writing this piece. Charlatans have always existed, and there have always been many of them. Fighting their sheer numbers is almost futile. But it's entirely realistic to fight their influence on people. How easily we fall for their tricks, how many people believe them, and how much money they steal from us: that's our responsibility. Personal. Each of us. For ourselves and for our loved ones. The importance of a healthy, critical view of new information, including scientific information, cannot be overstated.

Is science a preserver of knowledge, or is it a gatekeeper? It is both, and both are necessary. It preserves and multiplies our knowledge about the world we live in, and it guards the truth against contamination. Science, with its rituals and its limitations, is a truth filter, one of the tools humanity has invented to separate the wheat from the chaff. And as such, it is still the best tool we have.

Of course, every filter has its efficiency range, and every filter has its failings. There are both false positives (charlatans who get through) and false negatives (genuine work that gets rejected). Arguably, the former still cause more damage; this is why I concentrated on charlatans and not on unsung geniuses. We have to remember that, even though some charlatans slipped through the cracks, most didn’t.

I hope this article has clarified some things for some people. Science is, of course, complex, but not as complex as it's made out to be. It requires thought and understanding, but it's not exclusive or for the chosen few. It's attentive to details and often pedantic, but not didactic or condescending. It might seem prideful and demanding, but its pride is deserved, and its demands are achievable. And, most importantly—it's very, very beautiful.

If you’re still reading this, thank you for bearing with me. The first version of this text (including previous parts) was written in 2012, when I was still pursuing an academic career, and it represents positive and optimistic views on science that I try to uphold even today. Someday I might write another one, from a more jaded and cynical viewpoint, discussing the various ills that trouble scientific systems around the world, but for now this will stand.

Best,

K.
